While many celebrate the advancements of artificial intelligence, there exists a faction adamantly resisting its integration.

Shi Lu, a game concept artist with five years of experience, said that the development of generative AI this year has eclipsed any seen prior in the industry. Numerous game development enterprises are turning to AI, thereby diminishing the demand for human artists. Within mere months, artist fees plummeted from RMB 20,000 (USD 2,780) per piece to RMB 4,000 (USD 556), owing to the swift ascent of AI.

Yet, not every artist is as averse to the advances of AI. Nowadays, certain low- and mid-level artists find themselves in servitude to AI, with clients churning out hundreds of AI-generated character images daily, leaving artists like Shi to refine them.

“Merely two weeks ago, I could still tweak characters’ facial features or expressions,” Shi lamented. “Now, my task is to draw nose hairs and blackheads.” In her intern days, she could conceive game character concepts, yet now she finds each day “more disheartening than the last.” She attributed the plight of artists to AI.

“It’s like cancer,” she said, playing on the fact that “AI” sounds like the Chinese word for cancer. In the online sphere, opponents of AI contend that AI-generated art lacks soul, drawing parallels between the preference for it and necrophilia.

They argue that the principle behind AI-generated images is akin to “stitching together mutilated corpses.” Developers feed AI models large numbers of human-created artworks, which the AI then dissects, reassembles, and ultimately generates new images from. Some technical experts have refuted this characterization, but their rebuttals fall on deaf ears among opponents, who allege that AI’s image creation process often involves copyright infringement.

Major companies advocating for AI also find themselves embroiled in controversy.

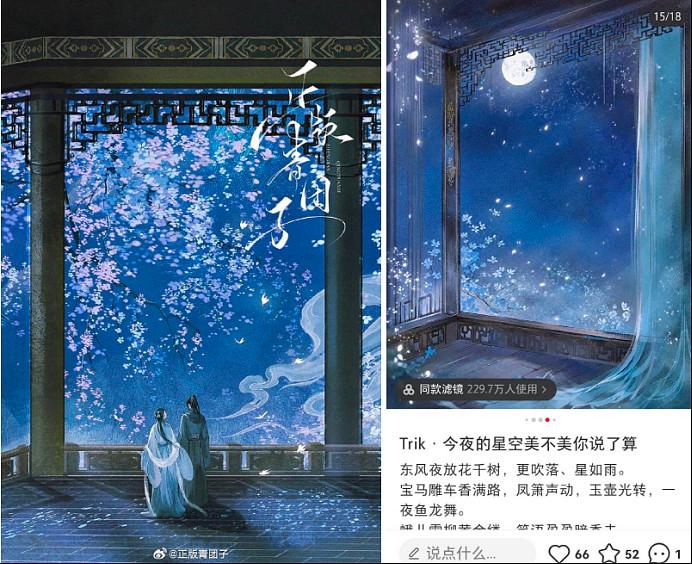

On November 29, 2023, four artists jointly sued Xiaohongshu over its AI model library, alleging infringement. They claimed that Trik AI, Xiaohongshu’s AI image creation tool, illicitly employed their works to train AI models.

One of the artists, aliased “Qing Tuanzi,” shared two illustrations: one of her own, and another that was generated by Trik AI. “The color palettes and visual styles bear striking resemblance to my work, leaving me feeling as though my hard work has been plagiarized,” she wrote. “I implore everyone to unite in defending their rights.”

A fan noted that Trik AI’s “Chinese-style” images “draw inspiration heavily from the works of talented ancient-style artists in China, with AI-generated ancient scenes sharing similar aesthetics.”

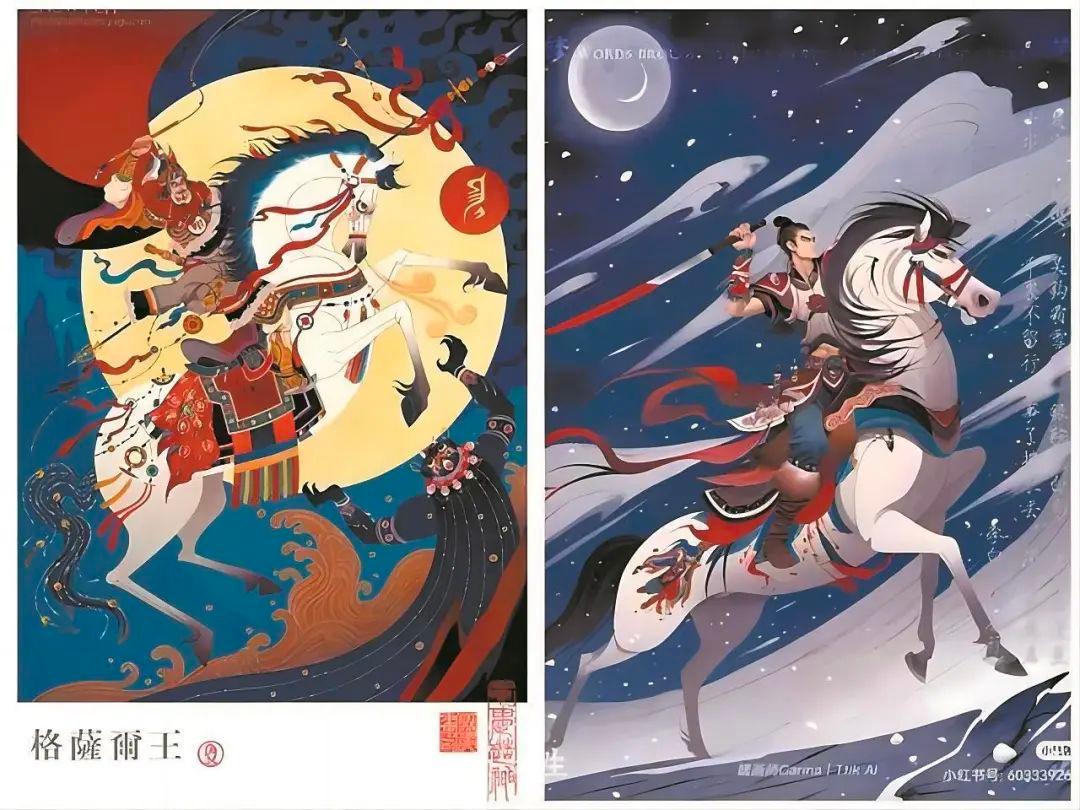

An artist with the alias “It’s Snowfish” juxtaposed images generated by Trik AI with his own works, noting significant similarities, including specific elements. He challenged Xiaohongshu, querying, “Are you content to feed the AI with my creations?”

Subsequently, Snowfish announced his cessation of updates on Xiaohongshu, citing the platform’s unauthorized use of his works to train AI and its seemingly hegemonic user agreement as sources of unease.

The case against Xiaohongshu has been lodged with the Beijing Internet Court, marking the first such case in China pertaining to infringement within AI-generated content training datasets.

Xiaohongshu declined to comment, and Qing Tuanzi rebuffed mediation efforts, opting for legal action instead. She hopes this case establishes a precedent for AI art copyright disputes.

NetEase-backed Lofter is a favored platform for artist-fan interaction. Illustrator Gao Yue frequently shares her works on Lofter, amassing a considerable following. Last year, she noticed the platform testing a new AI feature termed the “Old Pigeon Drawing Machine,” enabling users to generate paintings using keywords. She harbored suspicions that the platform might surreptitiously employ users’ works as AI training data.

“My trust in this platform plummeted instantly, and I was filled with dread,” Gao said.

Observing a growing chorus of dissatisfied users, Lofter officials removed Lofter AI’s post a few days later, asserting that the training data originated from open sources and did not include user data. However, the feature wasn’t immediately taken offline, which failed to assuage users’ concerns.

After much deliberation, Gao acceded to calls from fellow creators, purging her account before ultimately deleting it in protest. To her, departing from the platform represents one of the few means of self-preservation.

“I refuse to publicly contribute to a behemoth that may one day supplant me,” she said.

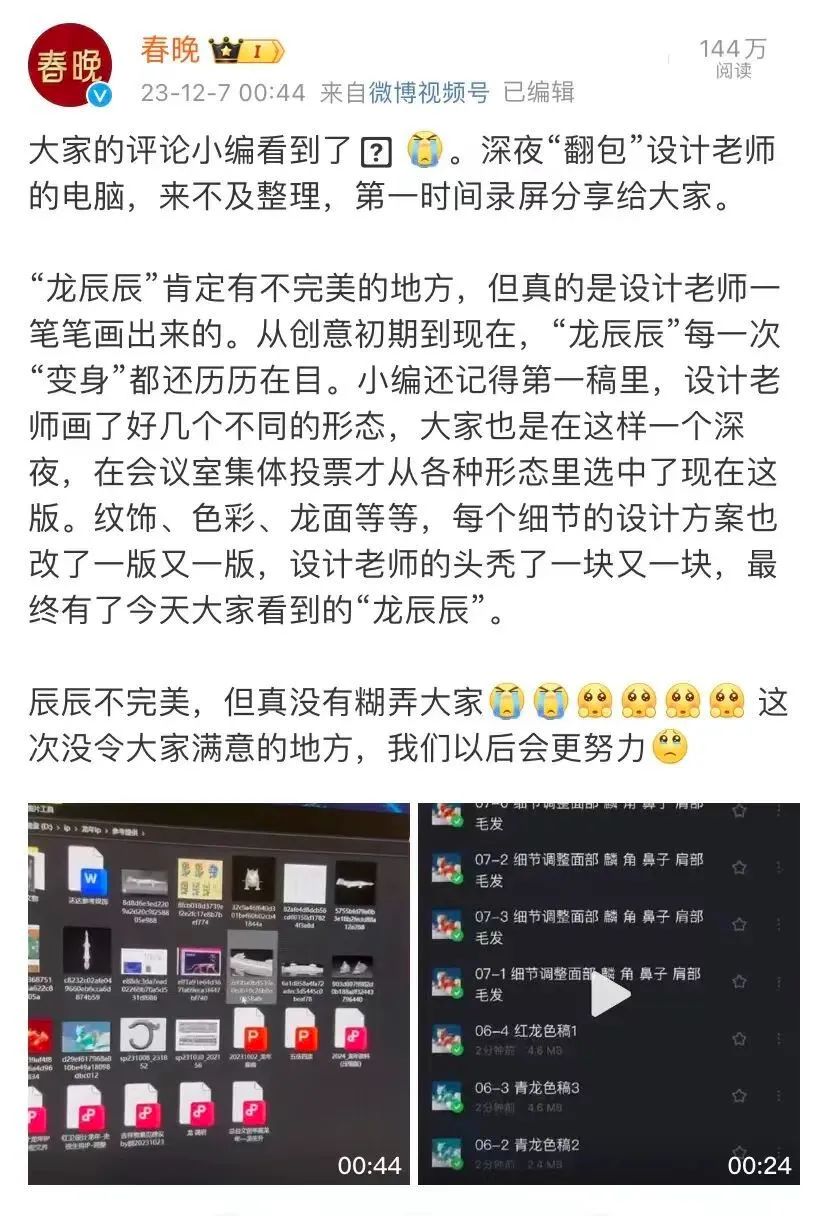

Tensions within the design community continue to escalate. Last month, Long Chenchen, the mascot for the Year of the Dragon Spring Festival Gala, drew criticism for purportedly originating from AI. Each of Long Chenchen’s claws sported a varying number of toes, the mouth awkwardly melded with the nose, and inconsistencies in the depiction of dragon scales further fueled skepticism. The Gala’s response on Weibo, attributing these discrepancies to a designer error, did little to assuage doubts.

Illustrator Ma Qun recalls a time when AI drawings were rife with flaws, often distorting humans into canine or equine forms. Even as AI advanced at an astonishing pace, telltale signs remained: for instance, AI still struggled to render hands.

Unfortunately, these flaws have rapidly vanished. Ma said that identifying AI-generated work increasingly hinges on subjective human impressions, such as the sense that AI creations “lack soul” or possess an “inhuman quality.”

She too resists AI, citing its dampening effect on her passion for painting. Lacking a formal art education, she cultivated her painting skills through self-study, driven by fervor. Post-graduation, she embarked on a career as a full-time artist. While the painting process occasionally proved tortuous, the joy derived from witnessing the fruits of her labor eclipsed all hardships.

Now, AI has streamlined much of the painting process, rendering it automated. Consequently, the skills and experience she painstakingly acquired over the years pale in comparison to the efficiency and potency of algorithms.

“Creation has been cheapened,” she lamented to 36Kr.

Throughout 2023, legal battles between creators and AI companies became increasingly common.

In Silicon Valley, fads in technological innovation come and go. Generative AI, however, captured attention swiftly due to its perceived utility: it is capable of upending established norms and poses a significant threat, particularly to content creators seeking to safeguard their copyrights.

Leading the charge against AI was illustrator Karla Ortiz. Confronted by the ubiquity of Stability AI within the painting domain, she was consumed by feelings of exploitation and revulsion. During this period, AI encroached upon several job opportunities, stoking her anxiety until she resolved to take a stand.

Ortiz sought assistance from attorney Matthew Butterick and embarked on a legal crusade. In the winter of 2022, Butterick had extended legal aid to a group of programmers who alleged that Microsoft’s GitHub Copilot infringed upon their copyrights. He contended that Copilot’s actions constituted “software piracy on an unprecedented scale,” and prepared meticulously for litigation. In January 2023, Butterick represented Ortiz in her case against Stability AI.

Similar lawsuits proliferated. Getty Images sued Stability AI in the US and UK, alleging unauthorized replication and manipulation of 12 million images from its repository. Notable authors such as George R R Martin and Jonathan Franzen filed lawsuits against OpenAI, while a consortium of non-fiction writers targeted both OpenAI and Microsoft. Music labels like Universal Music accused Anthropic of illegal utilization of copyrighted materials in training and unlawful dissemination of lyrics within model-generated content.

On December 27, 2023, The New York Times formally sued Microsoft and OpenAI, asserting that millions of articles from the newspaper were employed as AI training data, placing AI in direct competition with traditional news outlets.

A comprehensive legal battle over AI copyright has commenced, posing challenges to courts worldwide.

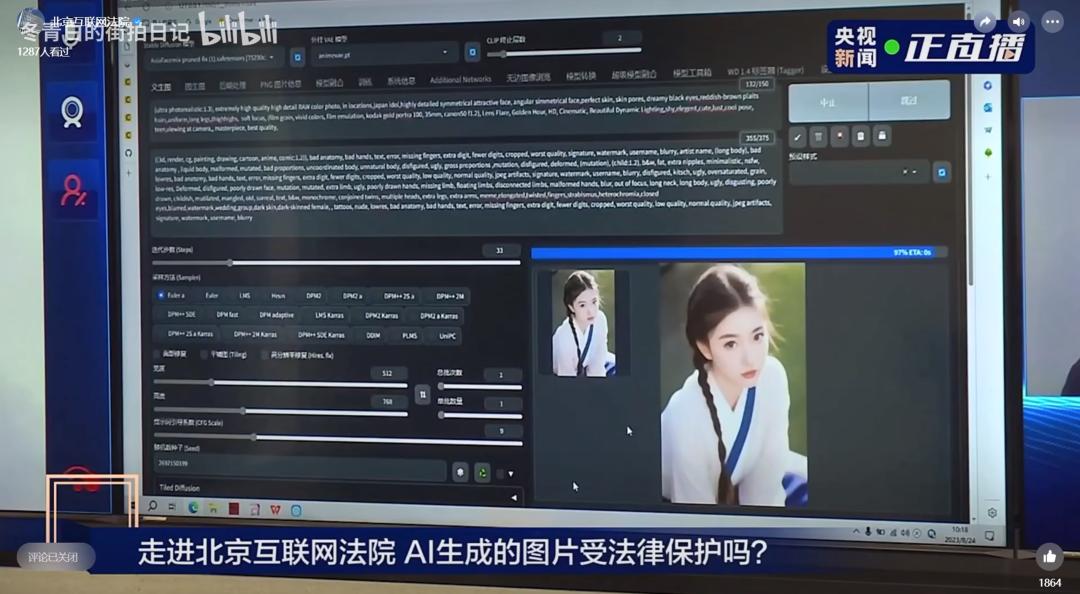

In China, an AI drawing infringement case spanned several months from filing to adjudication, considerably longer than typical image infringement cases.

In February 2023, plaintiff Li Yunkai discovered that his AI-generated image was used without authorization in a Baijiahao article, with his signature watermark conspicuously absent. Baijiahao, a content creation platform operated by Baidu, found itself embroiled in Li’s lawsuit, facing allegations of infringing upon his right to attribution and the right to disseminate information on the internet.

While the case itself wasn’t particularly convoluted, the fact that the infringed image was generated by Stable Diffusion, a popular AI model, attracted significant attention, earning it the moniker “first legal case of AI drawing” among netizens.

Li, an IP lawyer boasting nearly a decade of experience, devoted much of his career to scrutinizing the interplay between new technologies and copyright law. Last September, he delved into the realm of AI drawing tools, experimenting with Stable Diffusion to generate images, which he subsequently shared on Xiaohongshu. Upon discovering his image was featured in a Baijiahao article, he sought clarification regarding the ownership of copyright over AI-generated images and the court’s interpretation thereof.

The defendant, a woman in her fifties or sixties, professed to be ailing severely and expressed bewilderment upon receiving the court summons. During proceedings, she claimed to have sourced the image through an online search, unable to pinpoint its exact origin.

Moreover, she posited that AI drawing epitomized human ingenuity and should not be regarded as the plaintiff’s creation. This contention underscored the crux of the dispute: should AI-generated images qualify as creative works, and does Li possess the copyright?

This remains an unresolved legal quandary across various jurisdictions. In August 2023, a US court ruled that machine-generated content lacked copyright protection since “only works with human authors can receive copyrights.” However, this ruling was swiftly contested. Critics argued that if a photograph captured using a machine, such as a smartphone, enjoys copyright protection, then an image generated by an AI model should likewise be protected.

The Beijing Internet Court, however, reached a different conclusion.

Presiding over the case, the judge requested Li to meticulously elucidate the entire image generation process employing AI, encompassing software downloads and input prompts. To facilitate the judge’s comprehension of the technology, Li referenced copious materials and endeavored to expound upon the principles and creative processes underlying AI drawing.

Ultimately, the judge ruled that the significant intellectual input in crafting the AI-generated image in question reflected Li’s originality, thus warranting copyright protection.

Li told 36Kr that, while the ruling elicited jubilation among some AI companies in China for extending copyright protection to AI-generated images, apprehensions persisted regarding users’ apparent ownership of copyrights.

On the opposing end of the copyright dispute, AI companies have adopted a stance of indifference. Realistically, copyright disputes seem unlikely to be resolved in the short term. Hence, sidestepping the issue or staying silent is, for them, the most judicious approach.

In December 2023, Meitu unveiled a new generation of large models, boasting enhanced video generation capabilities. Although company executives underscored that AI served as an auxiliary tool, not intended to supplant professionals, many practitioners harbored concerns that these new features might eventually imperil their job prospects.

During a Q&A session, 36Kr raised queries regarding potential copyright disputes sparked by AI, inquiring about Meitu’s plans to address them.

“The issue of copyright pertaining to images generated by AI is subject to debate in practical terms and hinges on further legal regulation,” a Meitu executive said. “Despite the current lack of clarity in the legal framework, our overarching stance is to safeguard user copyrights, especially those of professionals.”

Another Meitu executive alluded to the outcome of Li’s case, voicing concurrence with the court’s ruling. “In scenarios akin to the ‘first legal case of AI drawing,’ we maintain that AI companies and AI models do not hold relevant copyrights.”

OpenAI garnered headlines during its developer conference by pledging to cover legal fees for individuals embroiled in lawsuits stemming from GPT usage. Nevertheless, some creators viewed this gesture as provocative.

To a certain extent, AI companies exude confidence. The central argument for suing AI companies, that AI models infringe upon copyrights when utilizing training data, remains imperfect. Some AI companies equate AI training to human learning, positing that if a fledgling apprentice must learn by studying or emulating their mentor’s work, such actions shouldn’t constitute infringement.

A legal expert speculated that AI companies might invoke the “fair use principle” as a defense. This principle sanctions certain uses of copyrighted material sans permission from or remuneration to the copyright holder, provided the use is equitable. For instance, scholars may quote excerpts from others’ works and incorporate them into their own, authors may publish adapted books, and laypersons may excerpt movie clips for reviews.

In essence, stringent copyright restrictions may stifle creative innovation.

Tech companies have long leveraged this principle to avert copyright disputes. Google faced litigation from the Authors Guild for scanning millions of books and posting excerpts online. In 2013, the court ruled in Google’s favor, citing the fair use principle and finding that the project created a searchable index of public benefit.

In the era of large models, this principle remains pertinent. Proponents argue that the process of generating content with large models scarcely diverges from human creation. Human creativity evolves based on the works of predecessors, and large models are no exception.

In the US, a judge has sided with Meta, dismissing claims that text generated by Llama (stylized as “LLaMA”), Meta’s large language model, breaches authors’ copyrights.

The judge said that authors could pursue further appeals, although this would prolong litigation, a scenario beneficial for AI companies. A scholar noted that as AI products gain traction and public acceptance of AI burgeons, courts will need to adjudicate with greater circumspection.

Moreover, the strategic importance and commercial value of AI are on an upward trajectory. AI enthusiasts fret that overly stringent copyright constraints could impede AI development.

Li has conversed with several large model developers who contend that regulatory authorities exhibit prudence, considering not only individual cases but also the trajectory of Chinese AI technological advancement and global competition in AI technology.

Another hurdle lies in the opaque nature of AI companies’ model training data.

An algorithm engineer revealed to 36Kr that approaches to procuring training data are generally unsophisticated, comprising web content crawling via spiders, sourcing open-source datasets, or resorting to purchasing from illicit markets. Some scholars quipped that AI companies, instead of vying for model performance supremacy, ought to compete for the most legitimate training data.

Nevertheless, castigating AI companies for opaque training data may seem somewhat unfair. After all, training data profoundly influences model performance and constitutes proprietary information for each AI company. Under existing legal provisions, AI companies bear no obligation or incentive to divulge training data.

Li told 36Kr that “China lacks an evidence disclosure mechanism,” implying that AI companies can withhold disclosure of model training data. “In this setup, as long as companies withhold such information, it remains inscrutable whether they’ve employed user data for training. It’s a stalemate,” he said.

As of February 2, the case involving the four painters suing Xiaohongshu’s Trik AI remains pending. Li is monitoring this case closely, expressing skepticism regarding the painters’ likelihood of success.

Regrettably, the prospects for creators prevailing in this copyright war appear dim.

Nonetheless, this doesn’t absolve AI companies of all responsibility. Public opinion exerts considerable sway. In November 2023, when Kingsoft Office introduced AI functionalities for public testing, a clause in the product’s privacy policy sparked widespread user ire. It stated that, to enhance AI accuracy, documents uploaded by users would serve as AI training data post-anonymization.

A copyright lawyer speculated that the company’s legal department included the clause to “render it as compliant as possible,” only to inadvertently provoke public backlash in the course of trying to avoid legal risk. This irony wasn’t lost on observers.

A few days later, Kingsoft Office issued a response, assuring users that “all user documents would remain untouched for AI training purposes,” quelling the furor. CEO Zhang Qingyuan told LatePost in an interview that the clause was outdated, had been intended to enhance PowerPoint formats, and bore no relation to user documents, which is why it led to user misconceptions.

Li said that the definition of AI-related copyright is presently explored solely through judicial practice on a case-by-case basis. “The prevailing consensus is to respect commercial norms; that is, the law will refrain from excessive intervention in enterprises’ autonomous conduct. If IP exists, the general inclination is to assign it to the developer.”

Tencent’s Hunyuan model stipulates in relevant clauses that the copyright of generated content remains with the user but is restricted to “personal learning and entertainment purposes only, precluding any commercial utilization.” Li noted, “Other companies aren’t as generous.”

A compromise that’s gaining traction is for AI companies to devise mechanisms to compensate content creators, akin to Spotify’s remuneration for musicians. If works are utilized as AI training data, creators could receive stipulated fees. In the short term, this would safeguard creators’ interests. As for the long term, it’s premature to speculate.

With 2023 dubbed “the summer of AI,” AI companies have successfully persuaded more individuals, including some content creators, that AI represents the future. Recently, Zhang Lixian, editor-in-chief of the magazine Duku, announced via Weibo that all cover page illustrations for the magazine in 2024 would be AI-generated.

An illustrator mused, “That Duku, an ardent proponent of quality, is embracing AI drawings is thought-provoking.”

KrASIA Connection features translated and adapted content that was originally published by 36Kr. This article was written by Lin Weixin for 36Kr.